Amazon Smart Delivery Glasses

Overview

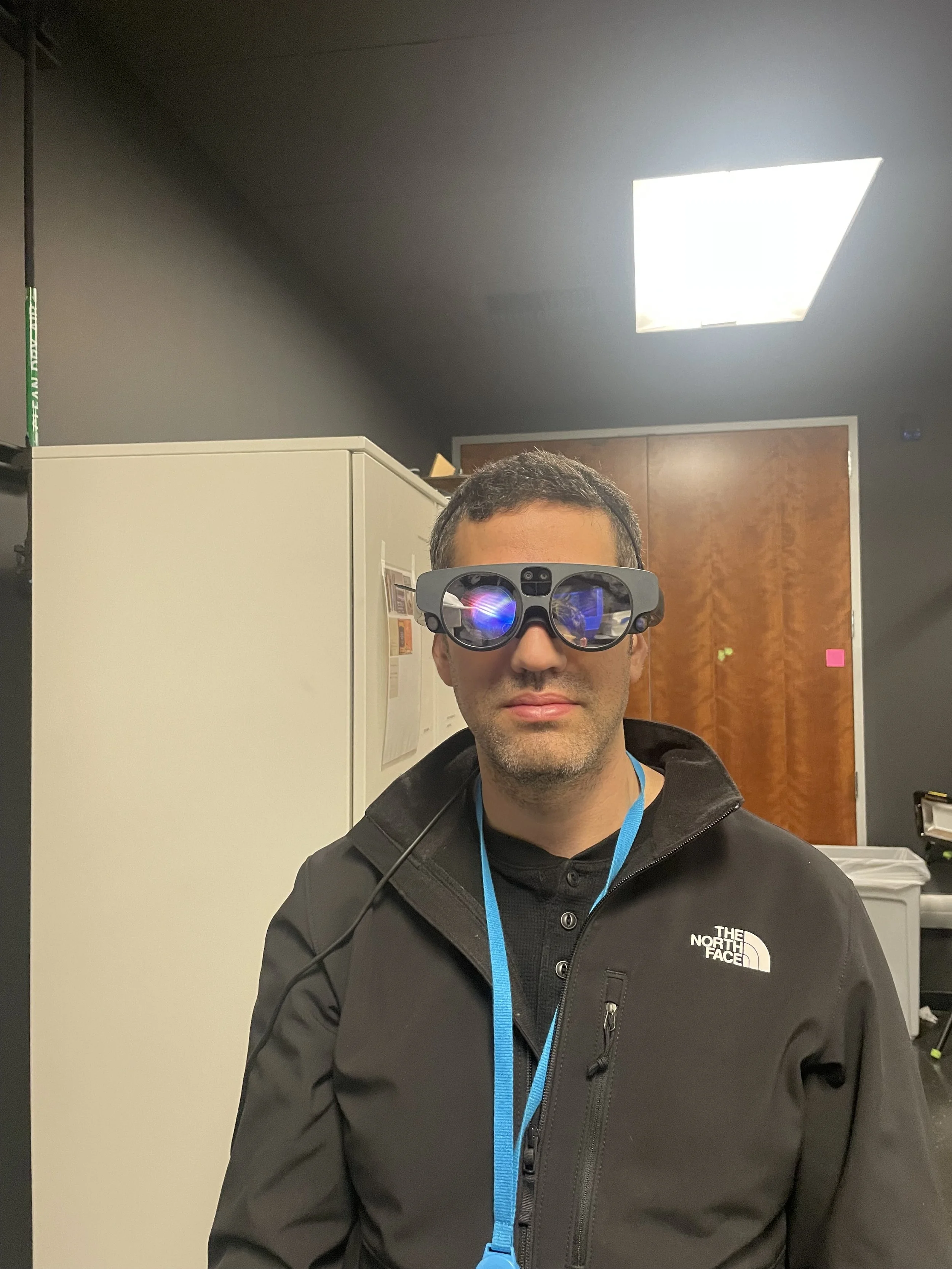

Amazon’s Smart Delivery Glasses allow drivers to navigate routes, scan packages, and receive on‑property safety alerts—without pulling out a phone. The glasses are lightweight, fit securely, and keep the wearer’s attention on the task and environment.

As Design Technologist & UX Engineer, I was the directly responsible individual (DRI) for the input mechanism: defining the interaction model, building hardware prototypes, running UX research (UXR), and engineering the green‑monochrome UI system.

Challenge

Create an input that’s as fast as a phone, works with gloves in rain/noise/motion, and never fails in “performance mode.” Voice, gesture, and temple touch were ruled out for reliability and speed. The answer had to be tactile, glance‑free, and robust.

Constraints

- Must be faster than phone workflows

- Operable offline & in extreme conditions

- Eyes‑free, glove‑compatible

- Single‑color (green) display hierarchy

Early Exploration

I tested whether the phone could double as a controller: a D‑pad layout and a touch‑gesture surface.

UXR showed both were slower and error‑prone with gloves and movement. A dedicated tactile device was required.

Defining the Input Paradigm

Temple touch made users hunt for hotspots; voice failed in outdoor noise and across accents. I proposed a separate tactile puck controller to keep interaction reliable and eyes‑free.

Rapid Form Study

Cardboard + foam mockups established size, angle, and hand feel before electronics—surfacing accidental‑press risks when carrying boxes.

User Input Techniques - Figma

Designing a user interface poses a chicken‑and‑egg problem: should the hardware follow the information architecture, or vice versa? I chose to start from the information, mapping the flow that Delivery Associates would follow to navigate it.

Hardware Prototyping

I built five controllers (Side Scroller, Joystick, Rotational Dial, Linear Up‑Down‑Select, and D‑Pad) to evaluate speed, accuracy, and muscle memory under UXR.

FOV Calibration (Magic Leap)

I built a calibration tool to align gaze and pantoscopic tilt, render text at preset offsets, and let users choose their most comfortable FOV while walking. Results guided waveguide placement for production.
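The core loop of such a tool can be sketched in a few lines. This is a minimal illustration, not the Magic Leap implementation: the function names, the offset values in degrees, and the participant selections are all hypothetical, and tilt alignment is left out.

```python
from collections import Counter
from itertools import product


def preset_offsets(horizontal_deg, vertical_deg):
    """Return every (x, y) angular offset at which test text would be rendered."""
    return list(product(horizontal_deg, vertical_deg))


def most_comfortable(choices):
    """Return the offset that participants selected most often."""
    return Counter(choices).most_common(1)[0][0]


# Hypothetical grid: three horizontal by three vertical positions.
offsets = preset_offsets([-10, 0, 10], [-5, 0, 5])

# Made-up selections for illustration only, not study data.
picks = [(10, -5), (0, -5), (10, -5)]
best = most_comfortable(picks)
```

Tallying the preferred offset across participants is one plausible way results like these could inform where the display sits in the field of view.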

Refinement & Engineering Handoff

- Hard detents to prevent over‑scroll

- Recessed primary button to avoid accidental presses

- Grip texture for glove compatibility
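The detents are a mechanical feature, but the same idea has a software counterpart. The sketch below assumes one detent click moves the selection by exactly one item and that the selection stops at either end of the list; `apply_detents` is a hypothetical helper, not production firmware.

```python
def apply_detents(index, detent_steps, item_count):
    """Move the selection by whole detent steps, stopping at the list ends."""
    return max(0, min(item_count - 1, index + detent_steps))


# Scrolling past the last item of a five-item list stops at the last item.
end = apply_detents(3, 5, 5)

# Scrolling back past the first item stops at the first item.
start = apply_detents(0, -2, 5)
```

Because each click maps to a single step and the range is clamped, a fast or heavy‑handed scroll with gloves cannot overshoot the list.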

Impact

- ~2 seconds saved per delivery vs. phone

- Validated tactile input architecture for Amazon AR wearables

- Safety‑first: peripheral, glanceable UI reduces distraction

- Established tactile interaction guidelines for future devices

Reflection

Designing input for smart glasses is designing for trust—between human reflex and machine response. As a Design Technologist, I used prototyping to collapse ambiguity. As a UX Engineer, I translated human factors into an interface that works at speed, in motion, and in the real world.